Ok so for a long time now I’ve wanted to set up Nginx Proxy Manager (NPM). I’d seen plenty of YouTube videos walking through it and wanted to see if I could get it done myself. Today was the day. The actual set up took me about 10 minutes, but troubleshooting it, well, that’s another story! For one reason or another I couldn’t get it to work initially, but after a lot of trial and error I got there. I’ll post my findings below and hopefully they will help clarify things. I wasn’t able to find a solution online, or any clarity on what the actual error was, so it might not help everyone, but I bet it helps a good proportion of you! Let’s get started.

Initial set up

I didn’t want to go opening up my local machine for experimentation, so I headed over to DigitalOcean and created a free account there. I used someone else’s referral link and got $100 credit (with 2 months to use it), so I thought I’d experiment there. If you wish to set up an account, I’d appreciate it if you used my referral link here. It won’t cost you a dime, but it helps me produce more content.

I set up a Docker droplet (basically a server running Ubuntu 20.04 LTS with Docker and Docker Compose already installed).

I chose the cheapest and simplest options. I didn’t bother adding storage; the initial 25 GB was more than enough for my testing purposes.

I chose to add SSH keys via PuTTYgen, but you can use a password for SSH if you’re more comfortable with that. One thing I also suggest is that you choose the hostname yourself. On my first attempt at this, I was given a long hostname which was really annoying to look at in the SSH terminal. Click Create Droplet and you’re done. Wait a few moments and you’ll be given the IP address of the server.

Next it was time to SSH in and run the updates. I like to use MobaXterm; the command ssh root@the.new.ip.address got me into the server. Next I ran:

sudo apt-get update && sudo apt-get upgrade -y

This ran through the various update procedures. Once it had completed, it was time to create a Docker Compose file.

I decided to place this in the /opt/ folder, so as I was in home… it was a case of running the following:

cd ..

cd opt/

mkdir nginxproxymanager

cd nginxproxymanager

nano docker-compose.yaml

Here’s where we paste in our code:

version: '3'
services:
  app:
    image: 'jc21/nginx-proxy-manager:latest'
    restart: unless-stopped
    ports:
      - '80:80'
      - '81:81'
      - '443:443'
    environment:
      DB_MYSQL_HOST: "db"
      DB_MYSQL_PORT: 3306
      DB_MYSQL_USER: "user"
      DB_MYSQL_PASSWORD: "somethinglong"
      DB_MYSQL_NAME: "npm"
    volumes:
      - ./data:/data
      - ./letsencrypt:/etc/letsencrypt
  db:
    image: 'jc21/mariadb-aria:latest'
    restart: unless-stopped
    environment:
      MYSQL_ROOT_PASSWORD: 'somethingreallylong'
      MYSQL_DATABASE: 'npm'
      MYSQL_USER: 'user'
      MYSQL_PASSWORD: 'somethinglong'
    volumes:
      - ./data/mysql:/var/lib/mysql

networks:
  default:
    external:
      name: nginxproxymanager_default

Obviously change the passwords and the user as needed. Be mindful that whatever you change at the top for NPM must match what you set at the bottom for the database: DB_MYSQL_USER, DB_MYSQL_PASSWORD and DB_MYSQL_NAME in the app service have to equal MYSQL_USER, MYSQL_PASSWORD and MYSQL_DATABASE in the db service. MYSQL_ROOT_PASSWORD is separate and can be different. We also add a default network. You’ll need to ensure that any Docker container you wish to run through NPM is on the same network. There are various ways of accomplishing this; I just chose to use the default network for the NPM stuff.

Ctrl+O, Enter, Ctrl+X and you should be back in the terminal. Now we need to run the command to pull the images. Make sure you’re in the same folder as the docker-compose.yaml file we made above and run the following:
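Because the compose file marks the network as external, Compose expects it to exist already and will not create it for you. A minimal sketch for creating it only when it is missing, assuming it hasn’t been set up some other way (the function name ensure_network is just an illustration):

```shell
# Name of the external network referenced in docker-compose.yaml
NETWORK="nginxproxymanager_default"

# Create the network only if it does not exist yet.
ensure_network() {
  # "docker network inspect" exits non-zero when the network is missing
  if docker network inspect "$1" >/dev/null 2>&1; then
    echo "network $1 already exists"
  else
    docker network create "$1" >/dev/null && echo "network $1 created"
  fi
}

# Usage: ensure_network "$NETWORK"
```

Run it once before bringing the stack up. After that, every container started with --network nginxproxymanager_default joins the same network as NPM.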

docker-compose up -d

Let it do its thing. Depending on your internet connection it will probably take a minute or so to pull, extract and run everything. Assuming all is well, you should be able to run the following command to see the containers running:

docker ps

So far so good, let’s leave this now and go and set up the domain options.

Cloudflare

Create an account at cloudflare.com

Click the Add site button at the top and type in the name of your domain.

Once done, Cloudflare will ask you to change the name servers on the domain itself. For example, I have domains at Ionos.com, so I logged into my account there and amended the name servers to what Cloudflare wanted (both primary and secondary servers). Once done, you need to wait a while for the record changes to propagate. In my example, I used a spare domain I have called rafflemove.com

Click on DNS at the top. Here you should make sure that the domains are all pointing specifically at the IP address DigitalOcean provided us with earlier:

I amended the A records for rafflemove.com, www and * and ensured they were all pointing at my DigitalOcean IP. I also added a couple of A records.

I added music and nextcloud. Initially the proxy status for each will show as Proxied. If you click on the toggle you can switch it to DNS only. I switched both of these to DNS only for the set up. I’d read online that using Proxied could make things harder to set up. I’m not sure if this is true, but I went with DNS only initially.

Next I clicked on SSL/TLS and made sure it was on FULL and switched on the SSL/TLS recommender.

At this point we were done with Cloudflare.
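Before moving on, it can be worth checking that the new records have actually propagated. A small sketch using dig, assuming it is installed on the machine you run it from (the function name dns_points_to is hypothetical, and the hostname and IP are placeholders):

```shell
# Compare the A record a resolver returns with the droplet's IP.
dns_points_to() {
  host="$1"
  expected_ip="$2"
  # "dig +short" prints only the answer, one address per line
  if dig +short "$host" A | grep -qxF "$expected_ip"; then
    echo "$host resolves to $expected_ip"
  else
    echo "$host does not resolve to $expected_ip yet"
    return 1
  fi
}

# Usage: dns_points_to music.rafflemove.com your.server.ip.address
```

This only matches while the record is set to DNS only. Once a record is Proxied, Cloudflare answers with its own addresses instead of the droplet’s.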

Creating the application container

Before we set up NPM, let’s create the containers that we want to access online.

I chose to set up Airsonic and Nextcloud. Normally I would use a Docker Compose file, but in order to keep this short, let’s set up the containers with the bare minimum of options. We don’t want to expose any ports on either, as we’ll be accessing them via music.rafflemove.com and nextcloud.rafflemove.com respectively. I also installed Portainer so that I was able to check logs etc. and make sure everything was running as it should (note I didn’t want Portainer to be exposed via NPM, so I let it sit on its own default network and exposed ports as normal).

docker run -d -p 8000:8000 -p 9000:9000 --name=portainer --restart=always -v /var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data portainer/portainer-ce

docker run --network nginxproxymanager_default -d airsonic/airsonic

I won’t cover the Nextcloud installation here to keep this brief, but after 5 minutes I had all containers happily running in Portainer. Portainer was reachable at your.server.ip.address:9000, but the others weren’t, due to us not exposing any ports for them. You can use Portainer though to double check that Nextcloud and Airsonic are both on the nginxproxymanager_default network and running.
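If you prefer the terminal to Portainer, the same checks can be sketched with the Docker CLI. This is a variation on the run command above rather than exactly what I ran: it assumes you give the container a fixed name (airsonic here is an assumption) so that NPM can forward to that name, since Docker resolves container names on user-defined networks. The function names are hypothetical.

```shell
NETWORK="nginxproxymanager_default"

# Start Airsonic with a fixed name instead of a randomly generated one.
run_airsonic() {
  docker run -d --name airsonic --network "$NETWORK" \
    --restart unless-stopped airsonic/airsonic
}

# Print the names of all containers attached to the network.
list_network_members() {
  docker network inspect --format '{{range .Containers}}{{.Name}} {{end}}' "$NETWORK"
}

# Attach an already running container to the network.
attach_to_network() {
  docker network connect "$NETWORK" "$1"
}

# Usage: run_airsonic; list_network_members; attach_to_network nextcloud
```

If a container is missing from the list, attaching it with attach_to_network saves recreating it.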

Nginx Proxy Manager

Returning to our machine, let’s set up NPM properly.

Navigate to your.server.ip.address:81

Log in with the user admin@example.com and the password changeme. Change those as necessary. I then logged out and logged back in with the new credentials.

Then click on the Hosts tab and add a Proxy Host. Fill in as below:

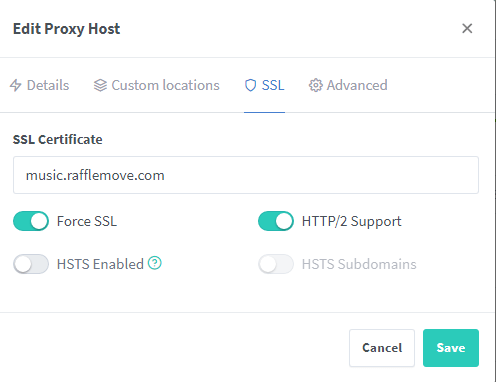

For the SSL certificate, you need to select Request a new SSL certificate.

Once done, fill in the rest as below. Hit save and with any luck the status should turn green / Online. To test, I clicked on the music.rafflemove.com button. With any luck you’ll be greeted with the login page of Airsonic. If you are, all that’s left to do is rinse and repeat the process with Nextcloud and any other containers you want exposed.

If for whatever reason you aren’t able to access the container, check that the container is actually running in Portainer (ask me how I know :)). If it’s not, start it. It might be a good idea to set its restart policy to always so that the container will fire up whenever the server does.
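The same check can be done from the command line. A rough sketch, assuming the container was given a name when it was created (ensure_running is a hypothetical helper, not part of Docker):

```shell
# Start a stopped container and set its restart policy to "always".
ensure_running() {
  name="$1"
  # .State.Status is e.g. "running" or "exited"; inspect fails if the name is unknown
  status="$(docker inspect --format '{{.State.Status}}' "$name" 2>/dev/null)" || {
    echo "$name not found"
    return 1
  }
  if [ "$status" != "running" ]; then
    docker start "$name" >/dev/null || return 1
    echo "$name was $status, started it"
  fi
  # Make Docker bring the container back whenever the server restarts
  docker update --restart always "$name" >/dev/null || return 1
  echo "$name restart policy set to always"
}

# Usage: ensure_running airsonic
```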

Wildcard Certificates

In order to make the above even simpler in NPM, we can create what is known as a wildcard certificate. (Thank you to Matthew Petersen for pointing this out. He has a great Facebook group called Dockerholics.) Essentially we’re going to bridge Cloudflare to NPM via Cloudflare’s API. This will allow you to use the same certificate (*.rafflemove.com) instead of creating one for every subdomain as above. I also want to thank “Bist” for his walkthrough instructions that helped me do this quickly and easily.

Go to Cloudflare.com and click on your domain name. It will bring you to the main page with some graphs and “Quick Actions” at the top on the right. Half way down on the right you’ll see API Zone ID and Account ID. Under that you need to click Get your API token.

Next Create Token (at the top)

Create Custom Token (at the bottom) => Get Started

Add a name, and then for Permissions, it needs to be Zone / DNS / Edit. => Continue to summary.

Create Token

Copy the token out. We’ll need this for NPM. Now go to the admin console of NPM (your.server.ip.address:81) and click on SSL. Add a Let’s Encrypt certificate and fill it out as below.

Remember to paste the token we just created into the box where appropriate. Save that and you’re done. Now every time you add a subdomain, when you click on the SSL cert, you can just select the one for *.yourdomain.com (or *.rafflemove.com in my case). If you have a large installation this will be much cleaner and easier to manage when it comes to renewing or replacing certificates.
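If the certificate request fails, one thing worth ruling out is the token itself. Cloudflare provides a verify endpoint for API tokens; a minimal sketch of calling it with curl (verify_cf_token and the CF_API_TOKEN variable are hypothetical names for illustration):

```shell
# Ask Cloudflare whether an API token is valid.
# $1 is the token copied from the Cloudflare dashboard.
verify_cf_token() {
  curl -sS "https://api.cloudflare.com/client/v4/user/tokens/verify" \
    -H "Authorization: Bearer $1" \
    -H "Content-Type: application/json"
}

# Usage: verify_cf_token "$CF_API_TOKEN"
```

The JSON reply should report success when the token is active. Note that this only confirms the token works, not that it has the Zone / DNS / Edit permission on the right zone.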

502 Bad Gateway Error and other Considerations

Now for several hours, for one reason or another, I was unable to get this to work. I was continually faced with 502 Bad Gateway errors. I can’t tell you the number of times I deleted the A record on Cloudflare, or deleted the proxy host, only to try and redo the configuration. I watched YouTube video after YouTube video and read several blog posts, including the official documentation. None of the sources seemed to answer why it wasn’t working.

The docs suggest that the most common reasons for a 502 Bad Gateway are mistakes in the configuration, such as incorrect HTTP / HTTPS settings, or SSL not working. What I couldn’t find anywhere were the following simple tips:

- When you configure the Proxy Host you still need to enter the container’s port, even if you’re not exposing the ports in Portainer. Nearly every video or blog post just shows port 80 being used. This didn’t work for things like Node-RED or Airsonic (they required 1880 and 4040 respectively).
- I needed to set the scheme to http and NOT https. I was able to grab the SSL certificates without issue and always intended to use HTTPS on the actual site, i.e. https://music.rafflemove.com, so naturally I selected https as the scheme in the proxy host. This would not work no matter how I tried it. Using http as the scheme fixed this immediately. The scheme describes how NPM talks to the container on the Docker network, and Airsonic only speaks plain HTTP there; the certificate covers the connection between the visitor and NPM.
- By not exposing ports on the containers in your Docker stack, you are not able to get to the container without using NPM. In the case of Airsonic, the only way I can navigate to that container is to use music.rafflemove.com; with the set up above I am unable to use my.server.ip.address:4040.
- I am unable to access Portainer on rafflemove.com:9000, only via my.server.ip.address:9000.
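The first two tips can be checked without touching NPM at all. A rough sketch: run a throwaway curl container on the same Docker network and request the upstream directly. This assumes the public curlimages/curl image and a container reachable by the name airsonic, neither of which is part of my set up above; check_upstream is a hypothetical helper.

```shell
NETWORK="nginxproxymanager_default"

# Request scheme://host:port/ from inside the Docker network
# and print only the HTTP status code.
check_upstream() {
  scheme="$1"
  host="$2"
  port="$3"
  docker run --rm --network "$NETWORK" curlimages/curl \
    -sS -o /dev/null -w '%{http_code}\n' --max-time 5 \
    "$scheme://$host:$port/"
}

# Usage: check_upstream http airsonic 4040
```

If the http request returns a status code while the https one fails with a connection or TLS error, that points at the scheme setting in the proxy host. If both fail, the port or the network is the more likely culprit.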

Proxied DNS

The only thing left to do was to go back to Cloudflare.com and turn on “Proxied” for the subdomains. This in essence obscures the server’s IP address from view, affording you more security. As I alluded to earlier in the post, I’m not sure if you could have done this at the start, but I did it at the end. YMMV

Summary

This was a challenge that had been waiting for me for a while. Personally, I don’t think it’s something that I will run on my main server. I am not so keen on the idea of keeping my services exposed to all and sundry on the internet. That said, I can definitely see the attraction for some people. Just be sure to keep passwords extremely long and hard to guess. For me, I am still in love with Tailscale. If you’ve never seen Tailscale, take a look at my blog post here. If I want remote access to any containers, I would sooner go this route. In essence it was easier to set up, with no port forwarding necessary, and in my opinion I have more layers of security, i.e. you’d need to authenticate via GitHub (2FA) to join my Tailscale network, then you would need to know the password and IP address of that machine on the network.

Having said that, for sharing a music or game server between friends, this would do nicely.

I’ve only just scratched the surface with Nginx Proxy Manager. I’m clearly not a dev, so take the tutorial with a pinch of salt, especially if I’ve used the wrong terminology. Not only did I want to see if I could get it working, but I also wanted to give DigitalOcean a try. I was pleasantly surprised how easy it was to get up and running, especially their implementation of SSH keys. If you fancy having a free play, then please consider using this referral link.

If anyone else has got any other ideas or suggestions concerning Nginx Proxy Manager, pop them in the comments, or in our Facebook group.

https://www.facebook.com/groups/386238285944105

If you’re considering a renovation and looking at the structured wiring side of things, or maybe you just want to support the blog, have a look below at my smarthome book, it’s available in all the usual places (including paperback)!

perfect sir.. Nice one

Glad it was useful

You saved me from the 502 Gateway error! Thanks!

No worries. Glad it fixed you up!

I’ve seen similar tutorials around, but this is definitely the better – organized, straightforward, complete and approached the most common issue of 502. Thanks and all the best for you Andrew!

Thank you for the kind words. I always try not to leave any gaps!

do the users and passwords have to match or can they be all different?